A Psycopg 2 vs Psycopg3 (3.0.15) benchmark

If you are a pythonista and regularly store data in a database, you probably will have heard about sqlalchemy (the orm library) and psycopg2 (a driver for postgresql databases). I started looking into memory server memory issues resulting from large inserts (about 2 million rows) causing an ETL pipeline of mine to crash.

I had recently found out about psycopg3 which also supports server side binding and binary data transmission. The looked promising as this would potentially solve the memory problems that I started to run into.

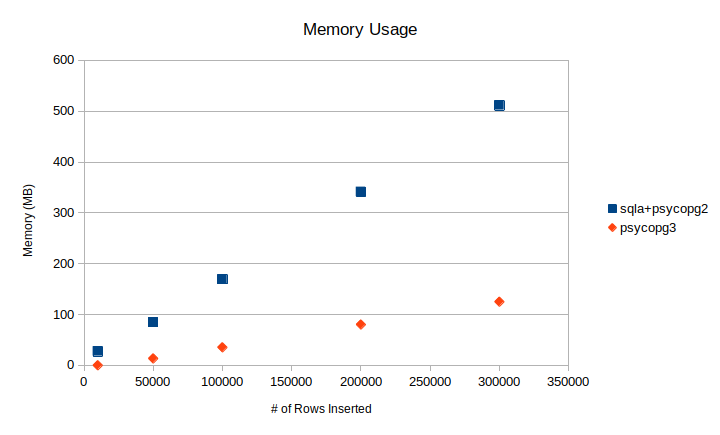

The memory problems faced were definitely solved while using psycopg3:

The data in the Memory Usage chart is generated from the table below:

| rows | sqla+psycopg2 | psycopg3 |

|---|---|---|

| 10000 | 27.54 | 0.00 |

| 50000 | 84.74 | 13.40 |

| 100000 | 169.39 | 35.32 |

| 200000 | 341.41 | 80.19 |

| 300000 | 511.55 | 125.04 |

Memory usage is specifically tracked when execute and insertion into the database is performed – a snapshot of the consumed memory before insertion is taken and then monitored while the insertion progresses.

In the data above, we generally see that executing sql queries with psycopg3 is 4~5x more memory efficient compared to using psycopg2 (ignoring the data point for 10,000 rows as I believe this to be an anonomly). Quite clearly, using 500MB of memory to insert 300k rows would result in > 3 GB memory being used when 2 million rows are inserted. Shrinking the insert memory footprint by using the binary type as provided by psycopg3 is advantageous in this case.

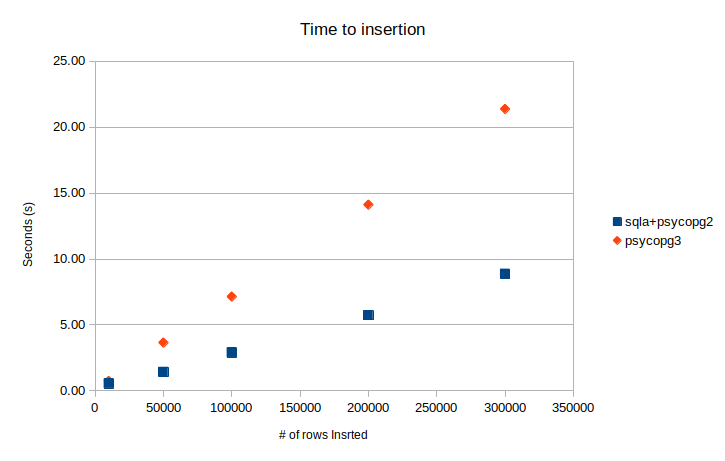

However, a different story is told when monitoring for insertion time:

The time to insertion chart is generated from the table below:

| rows | sqla+psycopg2 | psycopg3 |

|---|---|---|

| 10000 | 0.54 | 0.74 |

| 50000 | 1.43 | 3.66 |

| 100000 | 2.89 | 7.15 |

| 200000 | 5.74 | 14.12 |

| 300000 | 8.88 | 21.38 |

We see in this case that insertion time is negatively impacted on average by about 2.4~2.5x (ignoring the 10,000 row) data point.

As of this moment there is recognition that the executemany implementation for psycopg3 is not fully optimized yet at responses from the postgresql server is waited upon and that an update in psycopg 3.1.x should hopefully improve performance.

I'll report back again once a version of psycopg 3.1.x has been released.